Action Recognition

MediaPipe, TensorFlow, OpenCV, PyAutoGUI

For this project, Ryan:

Mapped a set of landmarks covering 30 frames of movement for each of eight different actions. MediaPipe was used to track the motion of the palm joints, and the landmarks were converted into NumPy arrays used to train the model.
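The conversion from tracked landmarks to training arrays could look roughly like the sketch below. It assumes 21 hand landmarks per frame with `x`, `y`, `z` coordinates (the layout MediaPipe Hands reports); the `Point` type and the helper names `frame_to_vector` and `build_sequence` are hypothetical stand-ins, not the project's actual code.

```python
from collections import namedtuple

import numpy as np

FRAMES_PER_SEQUENCE = 30  # sequence length stated in the project description
NUM_LANDMARKS = 21        # assumption: 21 landmarks per hand, as in MediaPipe Hands
COORDS = 3                # assumption: x, y, z per landmark

# Hypothetical stand-in for a tracked landmark; any object with
# .x, .y and .z attributes works the same way.
Point = namedtuple("Point", ["x", "y", "z"])


def frame_to_vector(landmarks):
    """Flatten one frame's landmarks into a 1-D float vector.

    Returns zeros when no hand was detected (landmarks is None),
    so every frame has the same length.
    """
    if landmarks is None:
        return np.zeros(NUM_LANDMARKS * COORDS, dtype=np.float32)
    return np.array(
        [[p.x, p.y, p.z] for p in landmarks], dtype=np.float32
    ).flatten()


def build_sequence(frames):
    """Stack per-frame vectors into one (30, 63) training sample."""
    if len(frames) != FRAMES_PER_SEQUENCE:
        raise ValueError(f"expected {FRAMES_PER_SEQUENCE} frames, got {len(frames)}")
    return np.stack([frame_to_vector(f) for f in frames])


# Example with synthetic data: 29 frames with a detected hand, 1 without.
fake_hand = [Point(0.1 * i, 0.2, 0.0) for i in range(NUM_LANDMARKS)]
sequence = build_sequence([fake_hand] * 29 + [None])
print(sequence.shape)
```

Padding missing detections with zeros keeps every sample the same shape, which is one simple way to let the sequences be stacked into a single array for training.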

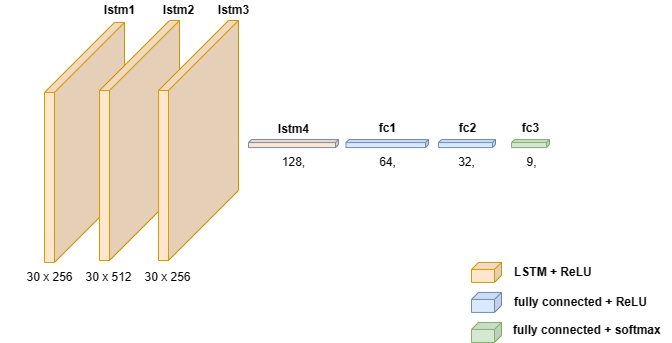

Built a model using TensorFlow, based on long short-term memory (LSTM) layers with ReLU activation functions followed by dense layers, with a softmax activation function on the last layer, to map the most recent 30 frames of data to one of eight actions.
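A minimal Keras sketch of such an architecture is shown below. The layer counts, layer widths, optimizer, and the 63-feature input size are assumptions chosen for illustration; only the LSTM/ReLU layers, the dense layers, the softmax output, the 30-frame input, and the eight classes come from the description.

```python
import numpy as np
import tensorflow as tf


def build_model(frames=30, features=63, num_actions=8):
    """Build an LSTM classifier over fixed-length landmark sequences.

    Layer sizes are illustrative assumptions, not the project's values.
    """
    model = tf.keras.Sequential([
        tf.keras.Input(shape=(frames, features)),
        # return_sequences=True passes the full sequence to the next LSTM
        tf.keras.layers.LSTM(64, return_sequences=True, activation="relu"),
        tf.keras.layers.LSTM(64, activation="relu"),
        tf.keras.layers.Dense(32, activation="relu"),
        # softmax turns the final layer into per-action probabilities
        tf.keras.layers.Dense(num_actions, activation="softmax"),
    ])
    model.compile(
        optimizer="adam",
        loss="categorical_crossentropy",
        metrics=["categorical_accuracy"],
    )
    return model


model = build_model()
# One dummy sample of 30 frames; the output is one probability per action.
probs = model.predict(np.zeros((1, 30, 63), dtype=np.float32), verbose=0)
predicted_action = int(np.argmax(probs[0]))
```

The predicted action is the index of the largest softmax probability; a live system would typically also apply a confidence threshold before acting on it.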

Accuracy tests using a confusion matrix showed that the model reached 95% accuracy when trained on, and given, 30 frames of data for every prediction.
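For reference, accuracy can be read off a confusion matrix as the sum of the diagonal (correct predictions) divided by the total number of samples. The sketch below uses made-up labels purely to show the calculation; it does not reproduce the project's test data or its 95% figure.

```python
import numpy as np


def confusion_matrix(y_true, y_pred, num_classes=8):
    """Count predictions: rows are true classes, columns are predicted classes."""
    cm = np.zeros((num_classes, num_classes), dtype=int)
    for t, p in zip(y_true, y_pred):
        cm[t, p] += 1
    return cm


def accuracy(cm):
    """Fraction of samples on the diagonal, i.e. predicted correctly."""
    return np.trace(cm) / cm.sum()


# Illustrative labels only (class indices 0..7), not real results.
y_true = [0, 1, 2, 2]
y_pred = [0, 1, 2, 1]
cm = confusion_matrix(y_true, y_pred)
acc = accuracy(cm)
```

Off-diagonal cells show which actions are confused with each other, which is often more informative than the single accuracy number.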

Integrated the model with OpenCV and PyAutoGUI so that recognized actions enable or disable mouse cursor control, while landmark tracking translates the position of the index finger joint into mouse cursor movements.
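The control logic described here can be sketched in two small pieces: a toggle driven by the recognized action, and a mapping from a normalized fingertip position to screen pixels. The action names `"enable"` and `"disable"`, the `CursorControl` class, and the `to_screen` helper are hypothetical; the sketch also assumes landmark coordinates normalized to the range 0 to 1, as MediaPipe reports them.

```python
def to_screen(x_norm, y_norm, screen_w, screen_h):
    """Map normalized (0..1) coordinates to pixel coordinates, clamped to the screen."""
    x = min(max(x_norm, 0.0), 1.0)
    y = min(max(y_norm, 0.0), 1.0)
    return int(x * (screen_w - 1)), int(y * (screen_h - 1))


class CursorControl:
    """Tracks whether cursor control is on, based on recognized actions."""

    def __init__(self, enable_action="enable", disable_action="disable"):
        # Hypothetical action labels; the real model's class names may differ.
        self.enable_action = enable_action
        self.disable_action = disable_action
        self.enabled = False

    def update(self, action):
        """Update the state from the latest recognized action and return it."""
        if action == self.enable_action:
            self.enabled = True
        elif action == self.disable_action:
            self.enabled = False
        return self.enabled


control = CursorControl()
control.update("enable")
if control.enabled:
    target = to_screen(0.5, 0.5, 1920, 1080)
    # In the live loop, the screen size would come from pyautogui.size()
    # and the cursor would be moved with pyautogui.moveTo(*target).
```

Keeping the mapping and the toggle free of any GUI calls makes them easy to check without a display; only the final `moveTo` call needs PyAutoGUI.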

The libraries used are Pandas, NumPy, MediaPipe, TensorFlow, OpenCV, and PyAutoGUI.